Playing railway with AppIntents Toolbox

Etienne Vautherin - 2024/10/11

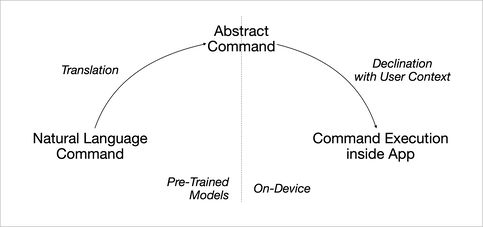

Apple Intelligence allows the user to take actions in natural language on their device. This seems natural, of course, yet such an interaction introduces a major innovation: when they are not visual, the actions on a device are usually exposed in languages (Unix shell script, Apple Shortcuts, etc.) that have many qualities, but being ‘natural’ is certainly not one of them! [1]

Apple Intelligence thus offers a transformation system that allows commands expressed in natural language to be translated into the execution of actions. This transformation is made possible thanks to the advancements achieved with Large Language Models (LLMs). However, Apple has added two very ambitious goals to this:

- Performing this transformation on-device (without communication with a server) to ensure that the user’s data remains private and secure.

- Enabling third-party applications to offer such actions executable in natural language.

The specific domains

To meet these objectives, Apple has chosen to divide the set of possible transformations into specific domains. Each domain is thus associated with a pre-trained model that is compatible with the processing power and memory of an iPhone. The choice of these domains reflects a compromise between:

- The breadth of the domain: the larger the domain, the more frequently it is used, the more natural-language example sentences are available to train the model, and the more relevant the model will be. We should not forget that we are talking about Large Language Models here.

- The targeting of a specific feature, which allows the model size to be reduced.

The breakdown into specific domains is currently as follows:

- Books for ebooks and audiobooks

- Browser for web browsing

- Camera

- Document reader for document viewing and editing

- File management

- Journaling

- Mail

- Photos and videos

- Presentations

- Spreadsheets

- System and in-app search

- Whiteboard

- Word processor and text editing

This breakdown will likely evolve, if only to cover all needs. Indeed, as of today, essential concepts such as locations, contacts, or the calendar are not taken into account. This absence could mean two things:

- These concepts are so important in a user’s daily tasks that Apple wants to optimize their definition before fixing them into a domain.

- These concepts are so fundamental that a third-party application will never be able to replace the system in handling them.

The coming months will provide answers on this point…

Abstract command and schema

The characteristic of these models is that they are common to all users, regardless of the apps installed on the device. The model exists even before an app can expose its features. In fact, if you are reading these lines, it’s possible that your app may still only exist in your imagination!

We therefore assume that the transformation performed by each of these models first produces a translation into an abstract command (i.e., a translation independent of the apps actually available). This abstract command is then translated into a call to an action exposed by an app actually installed on the device. If this translation is impossible, Siri will look for another response, outside of the available apps.

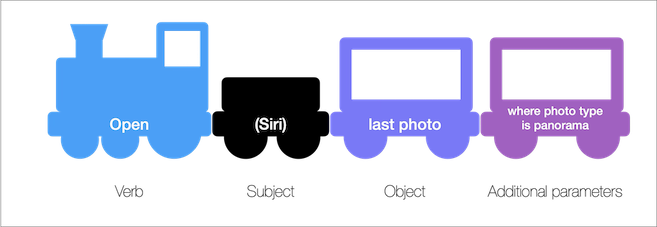

Let’s now focus on what such an abstract command might be. This command comes from a sentence in natural language. The structure of this sentence has been conceptualized for centuries through the definition of the grammar of the user’s language. A sentence in natural language thus contains:

- At least one verb. In this case, the verb is conjugated in the imperative, as the user is commanding the execution of a task: “Open”.

- A subject. Here, the subject is Siri since the sentence is addressed to this assistant. However, in an imperative sentence, the subject is usually implied: “(Siri) open”.

- The object of the action, if it is not implicitly described by the verb: “Open last photo”.

- Additional optional parameters: “Open last photo where photo type is panorama”.

The structure of such a sentence in natural language can then be represented with these elements:

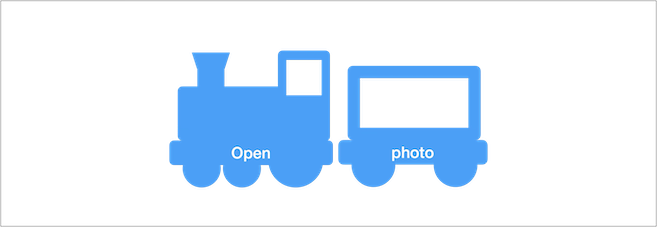

This is, of course, a simplified representation (and even a bit childish!). Apple Intelligence doesn’t use trains but embedding vectors to calculate the semantic proximity of two elements [2]. For the rest of this explanation, we will further simplify this representation by removing the subject and keeping only the verb and the main object (we will handle the case of additional parameters later).

We now have a train representing the concept of “Open photo”. Its domain is “Photos and videos”. Within this domain, the verb is “Open” and the object is of type “Asset Photo”.

Each specific domain thus defines:

- a set of verbs such as “Open”, “Delete”, etc.

- a set of objects such as “Asset”, “Album”, etc.

- a set of definitions for possible values. For example, “Photo” or “Video” to specify the type of asset.

Apple calls each of the elements in these sets a schema. In this railway representation, a specific color is assigned to each domain to make it easier to see which domain each schema belongs to.

The developer’s playground thus looks like this magnificent railway system.

If an action in an app cannot be described by the schemas provided by this set, it is still possible to expose it: Siri allows the definition of template phrases to designate an action.

This Lego train represents a template phrase designating a feature outside of any domain. Such a train is assembled by the developer [3]. It cannot be commanded in fully natural language; however, it will be recognized and launched by Siri to execute the specific feature of an app.

The AppIntents framework

All of this is very cute, but as a developer, I no longer play with toy trains—I code in Swift!

That’s why we are now going to dive into the AppIntents framework. In this framework, the domains are declared in the form of an enumeration, with each value corresponding to a “model” (AssistantSchemas.Model). Thus, we find:

- the .photos model for the Photos and Videos domain,

- the .mail model for the Email domain,

- etc.

Each of these models in turn declares properties, with each property corresponding to a schema. For example, .photos declares the following properties:

- openAsset, deleteAssets, etc. Each of them is associated with a verb concept.

- album, asset and recognizedPerson. Each of them is associated with an object concept.

- assetType, filterType, etc. Each of them is associated with an enumeration concept.

These associated concepts are declared as protocols:

- AssistantSchemas.Intent for the verb concept,

- AssistantSchemas.Entity for the object concept,

- AssistantSchemas.Enum for the enumeration concept.

To simplify, we will now refer to Intent, Entity, or Enum schemas. For example:

- .photos.openAsset is an Intent schema,

- .photos.asset is an Entity schema,

- .photos.assetType is an Enum schema.

These schemas make up the abstract command, obtained through a translation of natural language.
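To make this taxonomy concrete, here is a minimal sketch in plain Swift. It does not use the AppIntents framework: SchemaKind, Schema, photosSchemas and names(of:in:) are hypothetical names introduced only for illustration, and the list contains only the .photos schemas named above.

```swift
// Toy model of the taxonomy described above. These types are illustrative
// stand-ins and are NOT part of the AppIntents framework.
enum SchemaKind {
    case intent       // verb concept
    case entity       // object concept
    case enumeration  // set-of-values concept
}

struct Schema: Hashable {
    let model: String   // the domain, e.g. "photos"
    let name: String    // the property, e.g. "openAsset"
    let kind: SchemaKind
}

// Only the .photos schemas named in the text are listed here.
let photosSchemas: [Schema] = [
    Schema(model: "photos", name: "openAsset", kind: .intent),
    Schema(model: "photos", name: "deleteAssets", kind: .intent),
    Schema(model: "photos", name: "album", kind: .entity),
    Schema(model: "photos", name: "asset", kind: .entity),
    Schema(model: "photos", name: "recognizedPerson", kind: .entity),
    Schema(model: "photos", name: "assetType", kind: .enumeration),
    Schema(model: "photos", name: "filterType", kind: .enumeration),
]

// Returns the names of the schemas of a given kind, in declaration order.
func names(of kind: SchemaKind, in schemas: [Schema]) -> [String] {
    schemas.filter { $0.kind == kind }.map(\.name)
}

print(names(of: .intent, in: photosSchemas))  // ["openAsset", "deleteAssets"]
```

In the real framework these are typed properties rather than strings, so a misspelled schema is caught by the compiler.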

The executed command consists of structures declared by the app. In these structures, the AppIntent protocol plays a key role. This is where the framework gets its name.

It is, in particular, the perform() function of AppIntent that implements the execution of the command. The parameters necessary for the command are declared as properties by AppIntent.

For example, OpenIntent is an AppIntent specialized in opening an element of the app. OpenIntent thus declares the property var target to designate the element to open.

The sample code “Making your app’s functionality available to Siri” can open an object of type AssetEntity. It declares the structure OpenAssetIntent as follows:

    struct OpenAssetIntent: OpenIntent {
        var target: AssetEntity
        ...
        func perform() async throws -> some IntentResult {
            // Code opening target
            ...
        }
    }

To indicate that the type AssetEntity represents an object of the app, this structure adopts the AppEntity protocol.

Thus, all the elements that make up a command are defined by adopting the corresponding protocol:

- verb: AppIntent

- object: AppEntity

- set of values: AppEnum

Just as OpenIntent declares a specialized AppIntent, the framework also declares specializations for AppEntity. For example, IndexedEntity represents an object that is tracked by Apple Intelligence’s semantic index. We will return to this aspect later and more generally to the AppEntity protocol.

The sample code thus declares the structure AssetEntity as follows:

struct AssetEntity: IndexedEntity {

...

}

We now have all the declarations needed to associate the elements of the executed command with the elements of the abstract command invoked through natural language. This association is achieved using macros from the framework:

- macro for a verb, associating AppIntent with an Intent schema: @AssistantIntent(schema: ...)

- macro for an object, associating AppEntity with an Entity schema: @AssistantEntity(schema: ...)

- macro for a set of values, associating AppEnum with an Enum schema: @AssistantEnum(schema: ...)

Here are these macros implemented in the sample code:

    @AssistantIntent(schema: .photos.openAsset)
    struct OpenAssetIntent: OpenIntent {
        ...
    }

    @AssistantEntity(schema: .photos.asset)
    struct AssetEntity: IndexedEntity {
        ...
    }
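These excerpts cover the verb and the object. For the third kind of element, the set of values, a minimal sketch of an AppEnum could look like the following. The AssetType name, its two cases and their display names are assumptions made for illustration (they mirror the “Photo” and “Video” values mentioned earlier), not a quotation from the sample code. The macro is only shown in a comment, because the schema may impose requirements that this sketch does not verify.

```swift
import AppIntents

// Hypothetical set of values describing the type of an asset.
// To associate it with the Enum schema, the macro named in the text would
// be attached to this declaration: @AssistantEnum(schema: .photos.assetType)
enum AssetType: String, AppEnum {
    case photo
    case video

    // Name displayed by the system for the type as a whole.
    static let typeDisplayRepresentation: TypeDisplayRepresentation = "Asset Type"

    // Name displayed by the system for each individual value.
    static let caseDisplayRepresentations: [AssetType: DisplayRepresentation] = [
        .photo: "Photo",
        .video: "Video"
    ]
}
```

Such an enumeration can then be used as the type of a parameter, for example to express “where photo type is panorama” style constraints with the values the app actually supports.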

At the heart of the AppIntents Toolbox

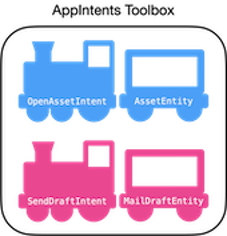

When these structures are associated with schemas, they are referenced by Apple Intelligence in the AppIntents Toolbox during the installation of the app.

A different app that declares other structures will also be referenced in the AppIntents Toolbox.

    @AssistantIntent(schema: .mail.sendDraft)
    struct SendDraftIntent: AppIntent {
        var target: MailDraftEntity
        ...
    }

    @AssistantEntity(schema: .mail.draft)
    struct MailDraftEntity {
        ...
    }

Once natural language is translated into schemas, Apple Intelligence retrieves the structures corresponding to these schemas in the AppIntents Toolbox.

It then just needs to initialize OpenAssetIntent with the corresponding value for the target parameter and call its perform() function.

The evaluation of this target value is delegated to Apple Intelligence’s semantic index. This is something we will detail soon.
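The lookup described in this section can be pictured with a toy registry in plain Swift. This is only a sketch under stated assumptions: the real AppIntents Toolbox is internal to the system, and ToyIntent, ToyToolbox, the string keys and the sample targets are all hypothetical names invented for this illustration.

```swift
// Toy model of the lookup described above. Every type here is a hypothetical
// stand-in; none of them belongs to the AppIntents framework.
protocol ToyIntent {
    init(target: String)
    func perform() -> String
}

struct ToyOpenAssetIntent: ToyIntent {
    let target: String
    init(target: String) { self.target = target }
    func perform() -> String { "Opening asset \(target)" }
}

struct ToySendDraftIntent: ToyIntent {
    let target: String
    init(target: String) { self.target = target }
    func perform() -> String { "Sending draft \(target)" }
}

struct ToyToolbox {
    // Maps a schema name to the intent type an installed app declared for it.
    var intents: [String: any ToyIntent.Type] = [:]

    // Stands for the referencing that happens when an app is installed.
    mutating func register(_ type: any ToyIntent.Type, forSchema schema: String) {
        intents[schema] = type
    }

    // Initializes the intent with its target and performs it.
    // Returns nil when no registered type matches the schema.
    func run(schema: String, target: String) -> String? {
        guard let type = intents[schema] else { return nil }
        return type.init(target: target).perform()
    }
}

var toolbox = ToyToolbox()
toolbox.register(ToyOpenAssetIntent.self, forSchema: "photos.openAsset")
toolbox.register(ToySendDraftIntent.self, forSchema: "mail.sendDraft")

// A schema served by an installed app.
print(toolbox.run(schema: "photos.openAsset", target: "IMG_0042") ?? "no app found")
// prints "Opening asset IMG_0042"

// A schema that no installed app has declared.
print(toolbox.run(schema: "books.openBook", target: "Dune") ?? "no app found")
// prints "no app found"
```

The nil result corresponds to the case mentioned earlier where the translation into an app action is impossible and Siri has to look for another response.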


[1] With HyperTalk, in 1987, Apple was already offering users the ability to run functions in the most natural language possible. HyperTalk is the language of HyperCard, a piece of software so innovative that it inspired the Web! The creator of HyperCard is the brilliant Bill Atkinson. In 1993, the AppleScript language continued the philosophy of HyperTalk, generalizing it to all Mac applications. Even today, it is possible to run scripts written in this language on your Mac!

[2] “Representing Tokens with Vectors”, in How GPT Works, offers an excellent introduction to the concept of semantic space.

[3] Phrases describing a feature are defined through App Shortcuts.